How to Benchmark Hard Drives in Linux

So you have some new hard drives. Maybe you are thinking about building a DIY storage server? Maybe some RAID? Maybe you just want to know if your drives are performing as well as when you bought them? How can you know? Measurement is knowing.

The thing about benchmarks is that you must always be skeptical. Each system’s particular disks, controller, RAID level and settings, CPU, RAM, filesystem, operating system, etc. can GREATLY affect performance. The only way to know is to measure it yourself.

1. Super Easy Block Level Read Test: hdparm

This particular “benchmark” is the easiest and the least reliable. It does raw reads only, and it tells you nothing about where on the disk it reads them from. Here is an example:

root@archive:/# hdparm -tT /dev/sda

/dev/sda:

Timing cached reads: 1472 MB in 2.00 seconds = 735.81 MB/sec

Timing buffered disk reads: 360 MB in 3.02 seconds = 119.24 MB/sec

It's… something. It is good for quick, non-destructive read tests to compare two disks or arrays.
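Since a single hdparm run is so noisy, one way to get a steadier number is to repeat the test and average the results. The following is a minimal sketch, not part of hdparm itself: the helper names `buffered_rate` and `average` are made up for illustration, and the parsing assumes the output format shown in the example above.

```shell
#!/bin/sh
# Pull the MB/sec figure out of each "buffered disk reads" line of
# hdparm -t output read on stdin. Assumes the line ends "= <rate> MB/sec".
buffered_rate() {
    awk '/buffered disk reads/ { print $(NF-1) }'
}

# Average a list of numbers read on stdin, one per line.
average() {
    awk '{ sum += $1; n++ } END { if (n > 0) printf "%.2f\n", sum / n }'
}
```

Run as root, something like `for i in 1 2 3; do hdparm -t /dev/sda; done | buffered_rate | average` would print the mean of three runs.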

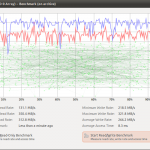

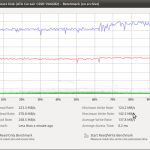

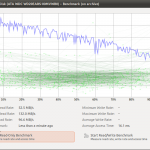

2. Better Block Level Read and Write Tests: palimpsest

Palimpsest operates on a block device. Like hdparm, it has nothing to do with files and filesystems. If run on a whole disk, you can see how the drive slows down as the head approaches the center of the platter. It also does seek tests.

You can run “palimpsest” on the command line, or in a GNOME environment go to System -> Administration -> Disk Utility. Modern versions of the program can also connect to remote servers that may not have a GUI. It can also be X-forwarded from remote servers.

Here is a gallery of some examples:

Notice the slow writes on the RAID 5 (second picture) and the small deviation in seek times on the SSD (fourth picture). You can also see how spinning disks slow down as they approach the center of the platter (fifth picture).
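If you have no GUI at all, the same slowdown across a disk can be roughly approximated with dd by timing a read at different offsets. This is only a sketch and is not how Palimpsest does it: the function name `read_rate_at` and the `DD_FLAGS` variable are hypothetical, and it assumes GNU dd and GNU date (for `%N`).

```shell
#!/bin/sh
# Read COUNT MiB starting OFFSET MiB into TARGET and print the rate in MB/s.
# DD_FLAGS is a hypothetical knob: by default iflag=direct is used to bypass
# the page cache; set it to an empty string where O_DIRECT is not supported.
read_rate_at() {
    target=$1 offset_mib=$2 count_mib=$3
    start=$(date +%s.%N)
    dd if="$target" of=/dev/null bs=1M skip="$offset_mib" count="$count_mib" \
        ${DD_FLAGS-iflag=direct} 2>/dev/null || return 1
    end=$(date +%s.%N)
    # Convert MiB read and elapsed seconds into MB/s (1 MiB = 1.048576 MB).
    awk -v s="$start" -v e="$end" -v n="$count_mib" \
        'BEGIN { t = e - s; if (t <= 0) t = 0.000001; printf "%.1f\n", n * 1.048576 / t }'
}
```

For example, as root, `read_rate_at /dev/sda 0 256` samples the start of the disk. Passing a large offset samples closer to the end (`blockdev --getsize64 /dev/sda` reports the device size in bytes), and on a spinning disk the second number should come out lower.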

3. Real World Filesystem Benchmarks: Bonnie++

In some ways, the above benchmarks are more theoretical than would be ideal. Unless you have a special application, you are like the rest of us: you work on files.

Working with normal files means you interact with a filesystem. Working on a filesystem means you have block sizes, extents, permissions, fragmentation, etc. All of these additional complexities need to be measured.

The solution here is bonnie++, which does what most applications do: write, read, seek, create, and delete files sequentially and randomly.

Here would be a typical invocation:

kyle@archive:/tmp# bonnie++

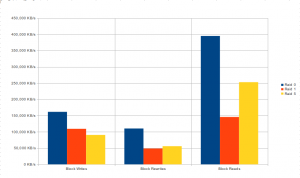

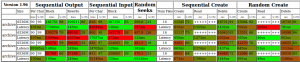

It is better to run it as a non-root user, from the directory where you want it to create its files. Its output is… a little hard to comprehend, and interpreting it is outside the scope of this article. You can read the documentation and build your own spreadsheet and graph with some LibreOffice Calc foo:

Nothing too fancy. It produces a lot of output, so you have to pick the numbers that are important to you. Another option is to use bon_csv2html to produce slightly more readable output:

cat benchmarks.csv | bon_csv2html > bench.html

firefox bench.html
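The benchmarks.csv file has to come from somewhere. One way to collect it, sketched here under the assumption that your bonnie++ supports the `-q` (quiet, CSV only on stdout) and `-d` (test directory) options, is a small wrapper that appends each run to a file. The function name `bonnie_to_csv` and the `BONNIE` override variable are hypothetical.

```shell
#!/bin/sh
# Run bonnie++ in DIR and append its CSV result line to CSV_FILE.
# BONNIE is a hypothetical override for pointing at a different binary.
bonnie_to_csv() {
    dir=$1 csv_file=$2
    # -d: directory in which bonnie++ creates its test files
    # -q: quiet mode, so only the CSV line is written to stdout
    "${BONNIE:-bonnie++}" -q -d "$dir" >> "$csv_file"
}
```

Calling `bonnie_to_csv /tmp benchmarks.csv` once per configuration you want to compare builds up a file with one row per run, which bon_csv2html can then turn into a single table.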

Bonnie benchmarks are the hardest to read, but they are the closest to the real performance of your disk in your environment.

Conclusion

So that is it. Try out different RAID configurations and filesystems, but benchmark them to know whether they improve or degrade your performance instead of depending on hunches and superstition!
